Understanding How the EU Regulates AI (Inside my AI Law and Policy Class #21)

An EU Deep Dive, Part 2

8:55 a.m., Wednesday. Welcome back to Professor Farahany’s AI Law & Policy Class.

Pop quiz: A healthcare startup wants to launch an AI diagnostic tool in Europe. How many different laws do they need to comply with? If, after finishing class #20 on the EU AI Act, you guessed “one really comprehensive law,” congratulations! You are an optimist. The answer is actually at least eight major frameworks at the EU level alone.

The EU AI Act. The GDPR for patient data. Medical Device Regulation. Digital Services Act. Data Act. Cyber Resilience Act. NIS2 Directive. Product Liability Directive.

And that’s just EU level. Now add Italy’s new criminal penalties for AI harm. France’s biometric rules. Germany’s data localization requirements.

Take a breath and let that sink in.

This is the reality of AI regulation in Europe today. And next week, on November 19, Brussels will announce something called the Digital Omnibus—a simplification package that’s supposed to fix this.

But “simplification” might be the most aspirational word choice since Facebook rebranded as “Meta” to signal they were getting out of the social media business.

This is your seat in my class—except you get to keep your job and skip the debt. Every Monday and Wednesday, you asynchronously attend my AI Law & Policy class alongside my Duke Law students. They’re taking notes. You should be, too. And remember that live class is 85 minutes long. Take your time working through this material.

Just joining us? Start with Class 1 and work through the syllabus. These final weeks build on everything we’ve covered.

I. Three Lenses for Understanding the European Way

Before we dive into the regulatory maze, we need to understand three forces shaping everything we’re about to discuss. Think of these as the tectonic plates beneath European tech regulation.

1. The Brussels Effect in Reverse

For twenty years, Europe has been exporting its regulations globally. Companies worldwide comply with GDPR not because they love privacy, but because they want access to European markets. Europe regulated, the world followed.

But now? We’re seeing something new. The Draghi report landed like a bomb in September. Mario Draghi, the former European Central Bank president and the man who saved the Euro, basically said Europe is regulating itself into irrelevance.

Consider Mistral. Founded by ex-Meta and ex-Google employees, it raised $640 million in a mix of equity and debt (on a $6.2 billion valuation). That’s impressive! Until you realize that at about the same time, OpenAI raised $8.3 billion at a $300 billion valuation. That’s not just a gap. It’s a chasm that’s growing.

2. The Precautionary Principle Under Stress

Europe’s regulatory philosophy has always been precautionary. In the U.S., we say “innocent until proven guilty.” In Europe, technology is “dangerous until proven safe.”

This made sense for nuclear power and genetically modified organisms. Does it make sense for AI that evolves faster than regulatory processes can assess it?

The leaked documents about the proposed simplification suggest that Europe might be shifting gears, in an attempt to “reduce administrative costs and reporting.” But … how do you do that without yielding on the precautionary principle? This graphic from the European Council helps underscore the point:

So far, each proposed change seems to be a small retreat from precaution toward pragmatism. So is Europe abandoning its principles or adapting them to reality?

Remember, every regulatory choice embeds values. What values are you choosing?

3. The Integration Paradox

Here’s a fun paradox. The more Europe tries to harmonize its laws, the more complex they get.

The EU AI Act was supposed to provide clarity. One law for all AI across Europe. Instead, it created what the European Parliament’s own study calls a complex interplay between the GDPR (data protection), the Digital Services Act (platform rules), the Data Act (data sharing), the Cyber Resilience Act (cybersecurity), the Product Liability Directive (product safety laws), and now Italy’s national AI law on top of all that.

Hold onto your answer. We’ll come back to it.

II. The Architecture of European AI Governance (A Visual Exercise)

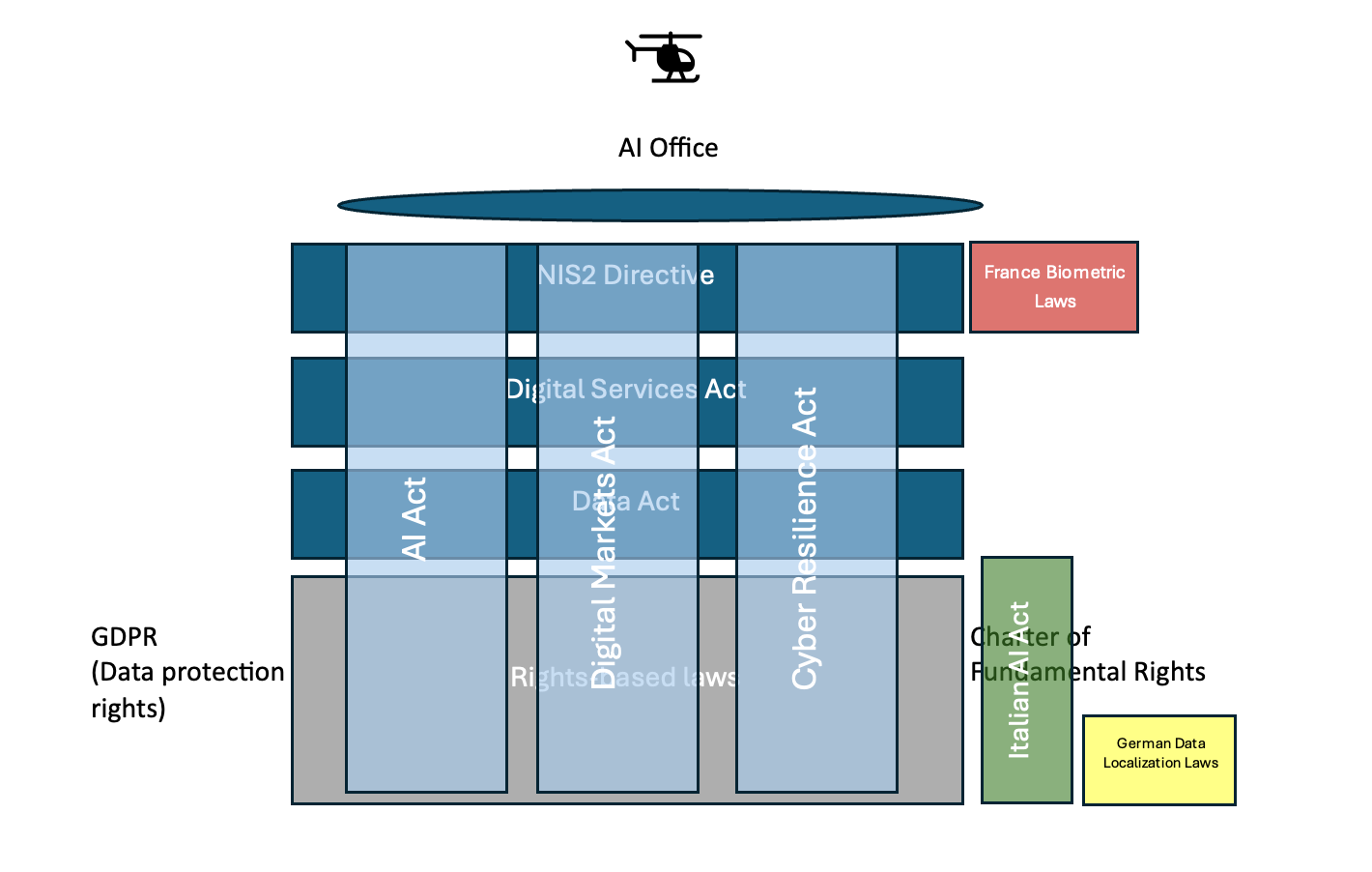

Let’s try to map the EU regulatory architecture, to understand where simplification fits in, and whether the simplification represents a shift in EU values in regulating technology.

It’s a tangled web of laws, so I want to try to build a visual representation with you.

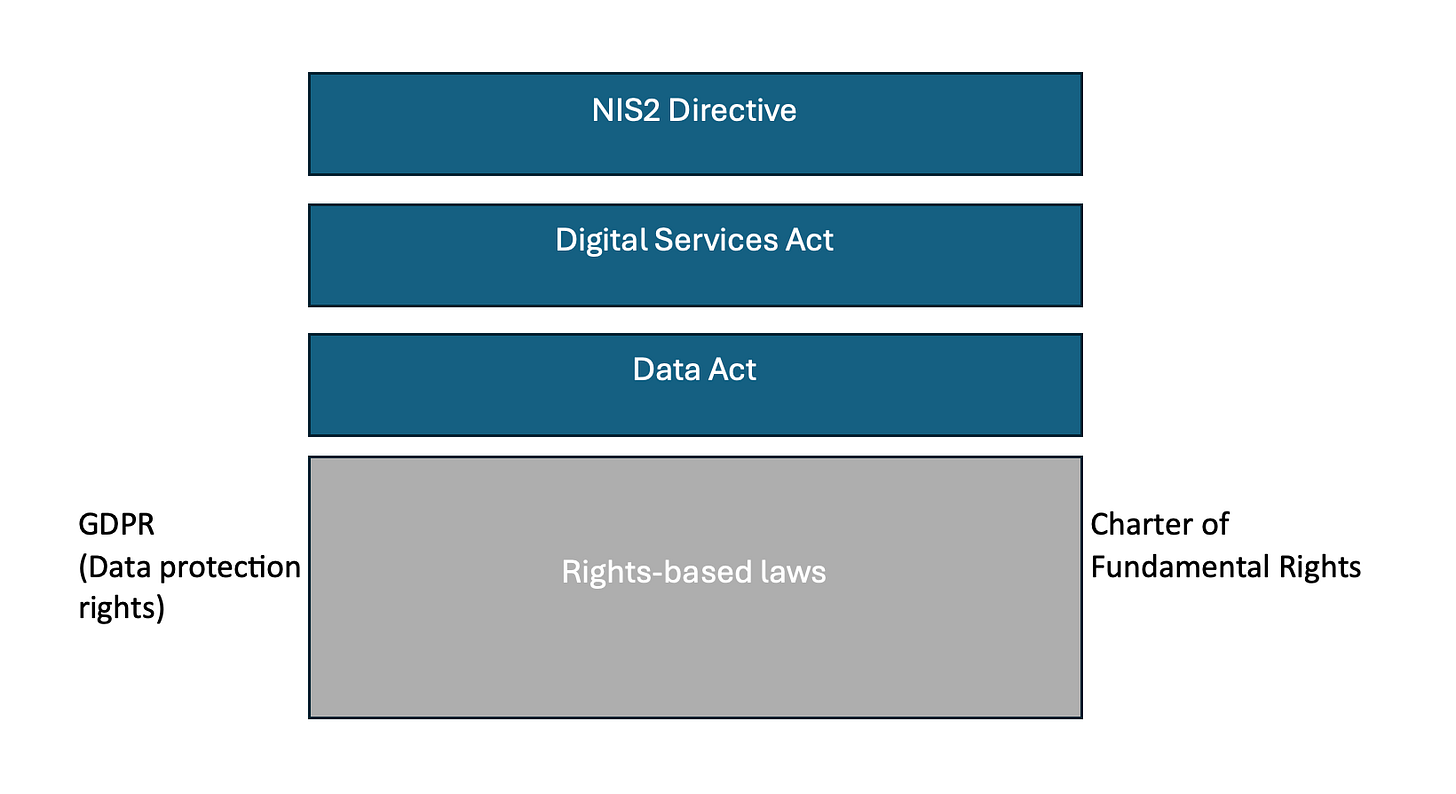

The Foundation

Let’s start with the foundation of EU laws, which are rights-based laws. These are constitutional—almost impossible to change.

Think of this as the bedrock poured in the aftermath of World War II and surveillance states. It’s why Europe regulates differently than Silicon Valley. Silicon Valley asks: What can we build? Europe asks: What should we be allowed to build?

Here we have the GDPR (General Data Protection Regulation), which took effect in 2018 and gives individuals control over their personal data. It is a cornerstone of European privacy and human rights law. If a company collects, processes, or stores your data, such as your name, email, location, or browsing history, the GDPR says it needs a legal basis, must protect the data, and must let you access, correct, or delete it. (Fun fact: that’s why you see banners everywhere you visit, but the simplification might address that.)

We also have the EU Charter of Fundamental Rights, which is the constitutional-level document that protects human dignity, prohibits discrimination, and guarantees fundamental freedoms. Think of it as Europe’s Bill of Rights, enshrining certain rights for EU citizens and residents into EU law. Although proclaimed in 2000, it took full effect with the Treaty of Lisbon in 2009.

This foundation means Europe regulates rights first, and innovation second.

The Horizontal Floors

These are cross-cutting regulations that affect everyone:

First floor: Data Act (how data moves)

The Data Act (2024) governs who can access data generated by connected products and IoT devices, giving users portability and access rights for their connected device data. Does your smart car generate data about your driving patterns? The Data Act says you (the user) should be able to access that data, share it with third-party repair shops, and switch to a competitor. Your data, your choice (but good luck figuring out how to exercise your rights).

Second floor: Digital Services Act (how platforms operate)

The DSA (2024) sets rules for online platforms (e.g. social media, marketplaces, search engines). It requires platforms to remove illegal content quickly, be transparent about content moderation, and conduct risk assessments if they’re very large (VLOPs/VLOSEs—over 45 million EU users). Think of it as the rulebook for how online platforms must behave, creating a tiered regulatory approach where the highest burden is placed on the Very Large Online Platforms (VLOPs) and Very Large Online Search Engines (VLOSEs). (The bigger you are, the more Brussels watches you).

Third floor: NIS2 Directive (how to secure everything)

NIS2 (Network and Information Security Directive, 2024) requires critical sectors (like energy, healthcare, finance, and digital infrastructure) to have cybersecurity capabilities and report incidents within 24 hours. (If hackers can shut down hospitals or power grids through your system, Europe wants to hear about it. Quickly!)

So here’s where we are so far. Regulatory Jenga. Remove the wrong piece and it all falls down.

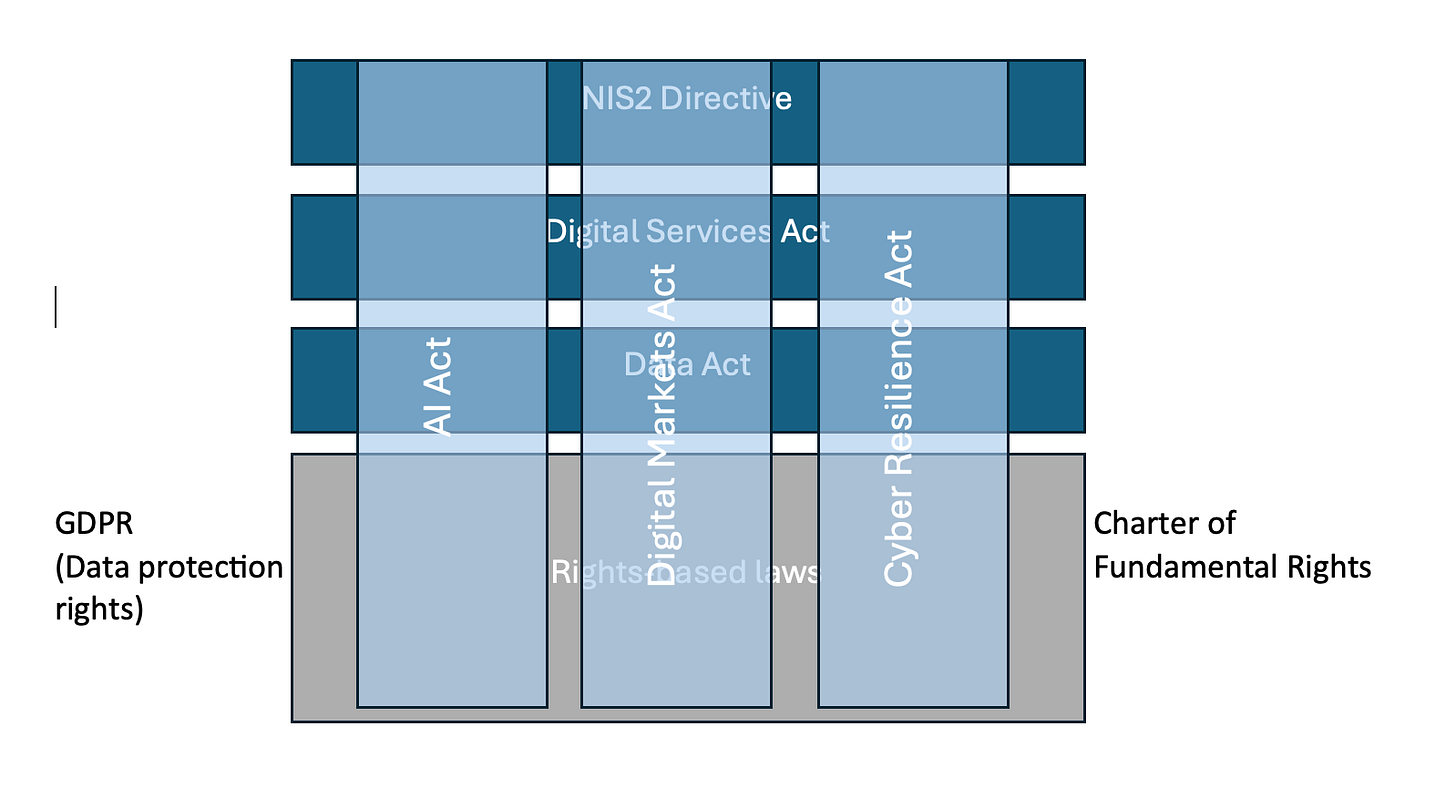

The Vertical Pillars

Now come the sectoral rules—these cut vertically through everything:

Left pillar: AI Act (governing artificial intelligence specifically)

The AI Act (2024) is the world’s first comprehensive AI regulation, which we covered in class #20. It bans unacceptable AI practices (like social scoring), heavily regulates high-risk AI systems (like AI used in hiring or healthcare), requires transparency for certain AI (like chatbots), and sets special rules for powerful general-purpose AI models. It uses a risk-based approach (higher risk means stricter rules and lots more compliance requirements).

Middle pillar: Digital Markets Act (governing gatekeepers)

The DMA targets “gatekeepers” through Regulation (EU) 2022/1925 and Procedural Implementing Regulation (EU) 2023/814, which contain the main rules for designating gatekeepers and implementing the obligations and prohibitions imposed on tech platforms like Google, Apple, Meta, and Amazon. It says: no self-preferencing, allow interoperability, and give users data portability. (Think of it as antitrust enforcement for the digital age.)

Right pillar: Cyber Resilience Act (governing product security)

The CRA (2024) treats cybersecurity like product safety. Any “product with digital elements” (think smart TVs, IoT devices, software) must be designed with security by default, have no known exploitable vulnerabilities, and receive security updates. (It’s like GDPR, but for cybersecurity instead of privacy.)

Let’s look at where we are now:

Here’s where it gets fun! (and by “fun” I mean “legally complex”):

If your AI system (AI Act pillar) processes personal data (GDPR foundation), uses data from connected devices (Data Act floor), might be deployed on a platform (DSA floor), then it needs to be secure (both NIS2 floor and CRA pillar), and if it’s built by Google, it might face additional restrictions (DMA pillar).

Which means for one AI system, you may have six different laws, each with different requirements, different timelines, and different authorities you need to interact with.

Does that healthcare startup need a compliance department bigger than its engineering team? (Who said AI is going to put lawyers out of work? Not if the EU has anything to do with it.)
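To make the stacking concrete, here is a toy sketch of the building we just drew. It is not legal analysis. The `AISystem` fields and the `applicable_frameworks` function are hypothetical names invented for illustration, and the yes/no triggers are a deliberate oversimplification of the scoping rules in each law.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """Hypothetical description of one AI system (illustrative fields only)."""
    processes_personal_data: bool
    uses_connected_device_data: bool
    deployed_on_platform: bool
    built_by_gatekeeper: bool


def applicable_frameworks(system: AISystem) -> list[str]:
    """Return the frameworks from the lecture's diagram that the system may touch."""
    laws = ["AI Act"]  # pillar: we assume the system is an AI system in scope
    if system.processes_personal_data:
        laws.append("GDPR")  # foundation
    if system.uses_connected_device_data:
        laws.append("Data Act")  # first floor
    if system.deployed_on_platform:
        laws.append("DSA")  # second floor
    # Security obligations: simplified here to "always on" for this sketch.
    laws.extend(["NIS2", "CRA"])
    if system.built_by_gatekeeper:
        laws.append("DMA")  # pillar that only applies to designated gatekeepers
    return laws


if __name__ == "__main__":
    startup_tool = AISystem(
        processes_personal_data=True,
        uses_connected_device_data=True,
        deployed_on_platform=True,
        built_by_gatekeeper=False,
    )
    print(applicable_frameworks(startup_tool))
```

Under these assumptions the startup’s tool lands on six frameworks, and flipping `built_by_gatekeeper` to `True` adds the DMA as a seventh.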

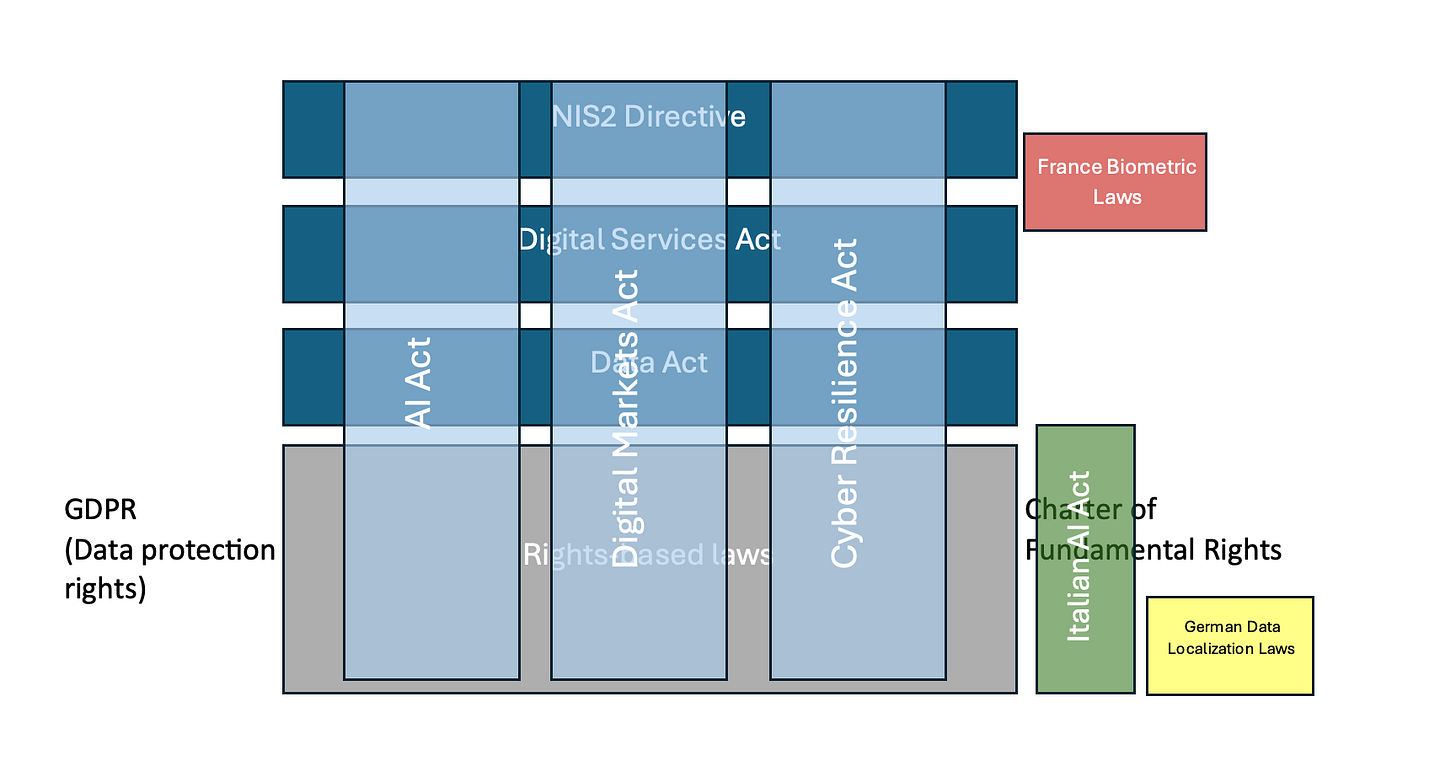

The National Additions

And just when you think you understand the architecture, member states add their own extensions, like the Italian wing to our building with its new penalties for AI harm, France’s biometric rules, and Germany’s data localization requirements.

It’s like buying a house only to discover that each room was designed by a different architect, none of whom ever talked to the others, and the previous owners added each extension without a permit.

Let’s see where we are now:

Oh, and where does the AI Office fit into all of this?

The AI Office is trying to be the oversight mechanism that hovers above everything, specifically for AI. It can look down into any room where AI is happening.

But it isn’t the only authority in town! Different enforcers control different aspects of this maze, including Data Protection Authorities for the GDPR, national market surveillance authorities that supervise and enforce the rules for AI systems in the EU Member States, the Commission/AI Office for GPAI and platforms, and ENISA, which may become the new express elevator for incident reporting (if the simplification passes).

This is what companies have to navigate. Every AI system potentially touches every floor, every pillar, every national addition.

And remember, this building is still under construction. The Digital Omnibus is essentially a renovation project while people are already living in the building.

III. Seeing the Collisions

Now let’s get specific about what happens at these intersections. For today’s class, we read the European Parliament study on the interplay between the AI Act and EU digital legislation—the one commissioned by the ITRE Committee and published on October 30, 2025.

These aren’t theoretical overlaps. These are real compliance headaches documented by Parliament’s own researchers. The people who wrote the laws are now publishing studies saying, “Umm… this might be a little more complicated than we thought!”

Take a healthcare AI system, the one diagnosing patients from continuous glucose monitor data. This single system triggers a cascade of overlapping requirements.

Under the AI Act, you need a Fundamental Rights Impact Assessment (FRIA) because it’s high-risk healthcare AI.

Under GDPR, you need a separate Data Protection Impact Assessment (DPIA) for processing health data.

The study found that “these instruments differ in scope, supervision, and procedural requirements, creating duplication and uncertainty.” Different timelines, different authorities, different documentation. A 30-person startup either needs expertise in both or hires consultants for both.

And then it starts to get philosophically impossible. The AI Act requires detailed logging for auditing, while GDPR demands data minimization. So which is it? Keep detailed logs or minimize data retention? Yes.

And then there’s the erasure problem:

If someone invokes their right to erasure (right to be forgotten) under GDPR Article 17, can you even delete training data from a neural network without retraining it entirely? If you retrain, is it still the same system that passed AI Act conformity assessment?

The legal frameworks don’t answer this.

As a “data holder” under the Data Act (because you’re using connected device data), you must also provide data access on request, enable portability, and handle third-party access requests, all while complying with the AI Act’s requirement that training data be “relevant, sufficiently representative, and free of errors” and GDPR’s cross-border transfer restrictions.

The reporting requirements reveal the system’s true dysfunction.

When a serious incident occurs at that hospital using your AI, you face five different reports with five different deadlines to five different authorities:

A GDPR personal data breach (72 hours to the Data Protection Authority)

A NIS2 significant incident (24 hours to the CSIRT)

An AI Act serious incident (15 days to market surveillance), etcetera.

Miss a deadline or get the sequence wrong, and you’re facing penalties under each framework. Who’s writing these reports at a 30-person startup? Your two engineers trying to fix the actual incident? Your CEO managing the crisis?
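Here is a rough sketch of what those overlapping clocks look like for the three reports named above. It only includes the windows stated in this lecture, and it assumes (unrealistically) that every clock starts at the same moment of detection. In practice each framework defines its own trigger. The names `REPORTING_WINDOWS` and `reporting_deadlines`, and the example timestamp, are hypothetical.

```python
from datetime import datetime, timedelta

# Reporting windows as stated in the lecture; the other reports are omitted
# because their deadlines are not given here.
REPORTING_WINDOWS = {
    "NIS2 significant incident (CSIRT)": timedelta(hours=24),
    "GDPR personal data breach (Data Protection Authority)": timedelta(hours=72),
    "AI Act serious incident (market surveillance)": timedelta(days=15),
}


def reporting_deadlines(detected_at: datetime) -> list[tuple[str, datetime]]:
    """Return (report, due time) pairs sorted by the earliest deadline.

    Assumption: every clock starts at `detected_at`, which simplifies the
    different "became aware" triggers in each framework.
    """
    deadlines = [
        (report, detected_at + window)
        for report, window in REPORTING_WINDOWS.items()
    ]
    return sorted(deadlines, key=lambda item: item[1])


if __name__ == "__main__":
    detected = datetime(2025, 11, 12, 8, 55)  # hypothetical incident time
    for report, due in reporting_deadlines(detected):
        print(f"{due:%Y-%m-%d %H:%M}  {report}")
```

Even in this simplified version, the first report is due a day after detection, while your engineers are still working out what happened.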

Ok, now be honest. I won’t tell Brussels:

IV. What Simplification Reveals About Europe’s AI Dilemma

The simplification package is like an X-ray—it shows us where the stress fractures are. But more importantly, the very fact that Brussels is scrambling to simplify regulations that haven’t even fully taken effect yet tells us something about the moment Europe finds itself in.

First, the competitiveness crisis is real. When Mario Draghi publishes a report saying that Europe’s regulatory burden is “hampering innovation and growth,” Brussels can’t ignore it.

This isn’t some Silicon Valley libertarian complaining about regulation. This is Mario Draghi. The man who literally saved the Euro. And he’s saying, “Houston, we have a problem.”

The AI Act was supposed to give Europe a “first-mover advantage” in trustworthy AI. Instead, it risks cementing a “first-regulator disadvantage” where European innovation gets strangled while American and Chinese AI companies operate with fewer constraints.

Second, the complexity is suffocating. The European Parliament’s own study documented that the regulatory overlaps are unmanageable.

Five different incident reports.

Separate impact assessments under multiple frameworks.

Conflicting requirements about data retention.

This isn’t just inconvenient; it’s a fundamental misalignment between how EU law is made (slowly, through negotiated compromises between 27 member states with different legal traditions) and how AI technology evolves (rapidly, with capabilities emerging faster than regulatory processes can adapt).

Third, member states aren’t waiting. Italy passed its own comprehensive AI law in October 2025 with criminal penalties and workplace surveillance rules beyond what the AI Act requires. Other countries are watching. If every member state adds its own layer—whether stricter protections or looser innovation zones—the “Digital Single Market” fragments into 27 different AI regimes. This defeats the entire point of EU-level harmonization. But centralization has its own problems. The AI Office is a department within the Commission, not an independent agency with democratic legitimacy.

Fourth, European values are under pressure. Here’s the real question. Can you be both the most protective AND the most competitive? Can you maintain the world’s strongest fundamental rights framework while also fostering the world’s most dynamic AI ecosystem?

The AI Act is as much a statement of who Europe wants to be as it is practical regulation. It says: we reject surveillance capitalism, we reject social scoring, we reject AI systems that manipulate or discriminate, even if that makes us less “competitive” in a narrow economic sense.

But what if protecting those values makes you globally irrelevant?

If Europe maintains its protective approach, does it condemn itself to being a rule-taker rather than a rule-maker? Is Europe protecting its values, or protecting European values into irrelevance?

The simplification is Europe’s live experiment in threading this needle.

V. Wrapping Up

As we’ll see in the weeks ahead, China has efficiency. Silicon Valley has innovation.

Europe has... process. Lots of process.

But maybe, and hear me out here, maybe process is Europe’s superpower.

Maybe the fact that it takes four years to pass a law and four months to realize it needs fixing is a feature, not a bug. It shows a system capable of learning, adapting, correcting course.

Maybe the complexity we’ve been grappling with all class, including the overlaps, the duplication, the five-report nightmare, is the inevitable result of trying to regulate AI democratically. Maybe the tension is the point.

There’s no perfect approach to regulating AI. There are only choices, embedded in law, revealed through revision, and judged by history.

P.S. If you made it this far and answered all the polls, you’re exactly the kind of person who would thrive (or at least survive) navigating EU AI regulation. Consider that career path carefully. The lawyers are expensive for a reason.

Class dismissed.

Your Homework:

Watch for the November 19 announcement. But more importantly, watch what happens after—because the simplification package, whatever its final form, is just the beginning of Europe’s long journey to govern artificial intelligence.
